Our Thoughts on Large Language Models (LLMs)

By the Rocketship team

Launching dreams to space,

Rocketship soars with vision,

Investing in stars.

Rocketship.vc described as a haiku by ChatGPT

While we start this blog post with a haiku written by ChatGPT, please be assured that the rest of this blog was authored by humans! Such is the excitement, opportunity, and risk of this disruptive new technology.

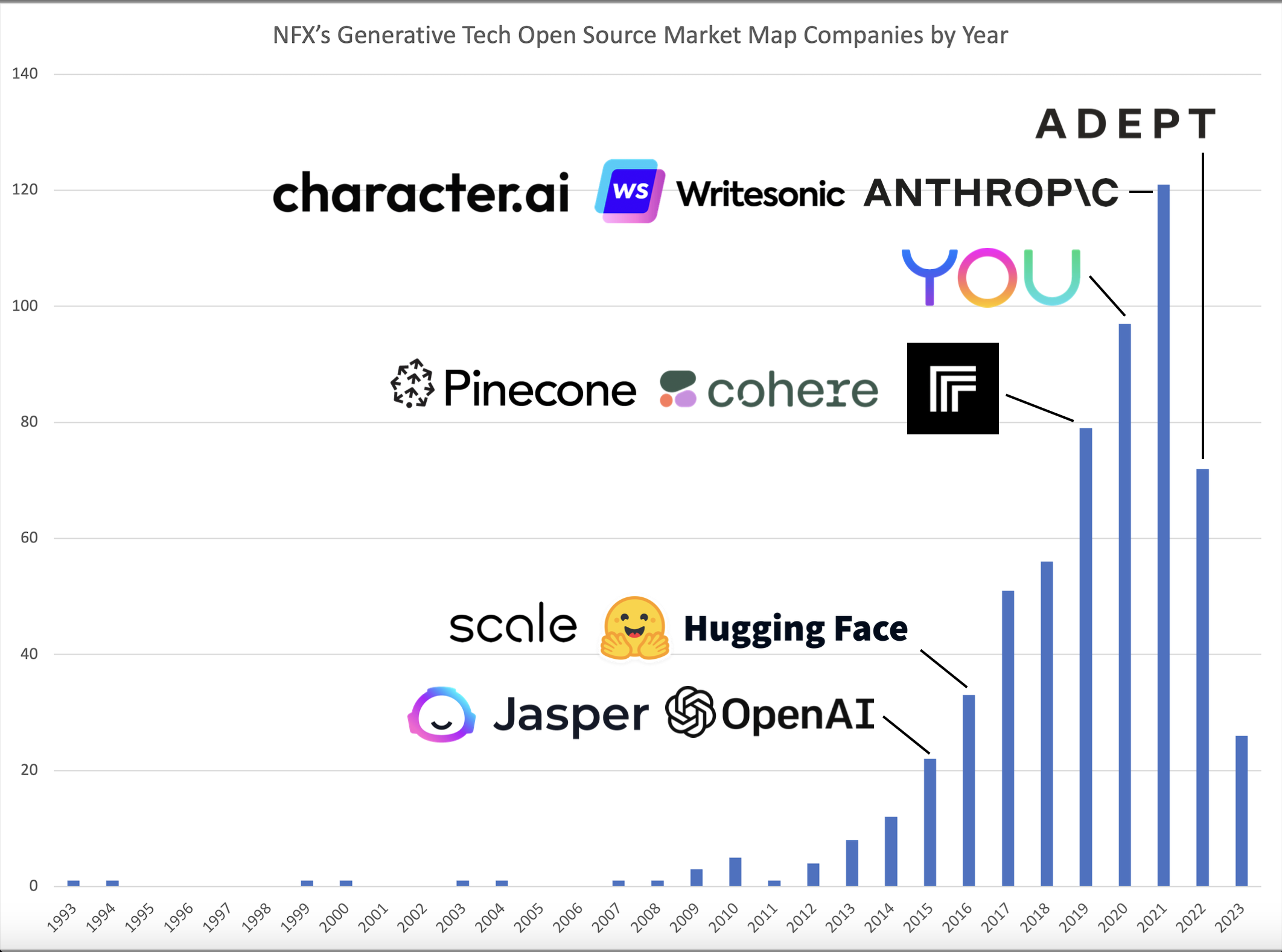

This excitement and opportunity are reflected in the companies our models are identifying. Our investing approach differs from a traditional venture firm in that our deal flow is data-driven. We have created the world’s largest dataset on early-stage companies and use algorithms to identify and go outbound to a small number of what we believe are the top companies across all sectors and geographies. As innovation in AI has grown and investment in the space has increased (approximately 4x from 2020 to 2023), we’ve seen our models shift to identify more companies in the generative AI space, and our further discussions with these companies have confirmed our excitement for the space. We’ve already invested in companies like You.com that are applying improvements in AI technology to solve problems for people and businesses, and we continue to leverage our backgrounds in AI and data science to identify and support companies with a range of AI use cases.

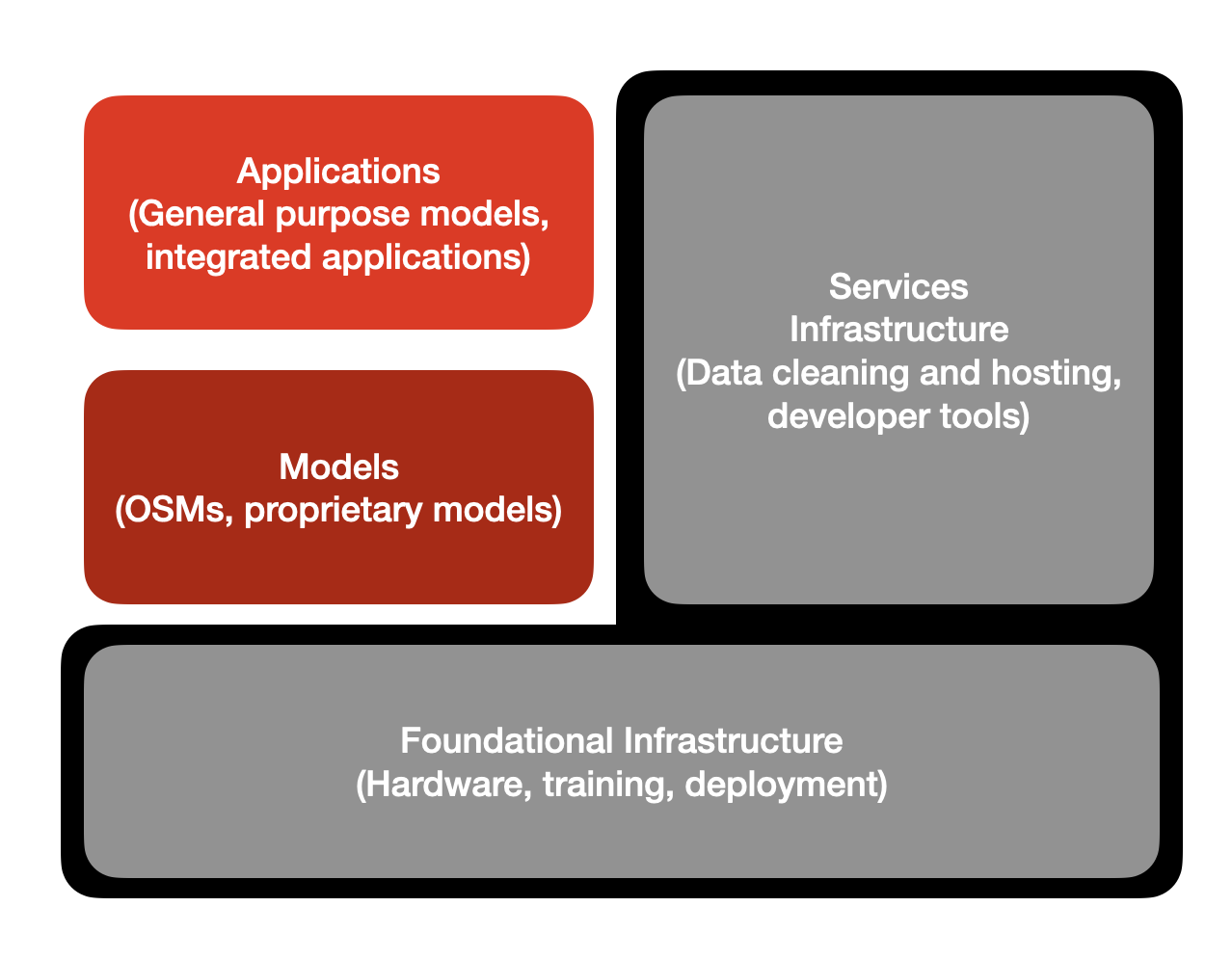

The core idea driving Rocketship.vc since our beginnings in 2014 (the same year as the first Generative Adversarial Networks that preceded many of today’s generative image models) has been the capability of data and machine learning to discover the best early-stage companies working anywhere on anything. As AI practitioners, we have been watching the development of Large Language Models (LLMs) and other types of generative AI with deep interest and are encouraged by how nearly 20 years of research and development have turned into an overnight success. Our experience and expertise, together with our unparalleled dataset on the competitive landscape of this fast-changing space, allow us to offer a perspective that combines a deeper understanding of the technology with an assessment of the business use cases that it enables. We believe that in the near term, there are three areas of potential innovation and hence investment: models, support infrastructure, and applications.

Models

The most visible area of current innovation is the development of the LLMs themselves, with the progress and consumer-facing visibility of models like GPT-4 creating pop culture and investor excitement about the space. While there are several open source attempts (also called Open Source Models or OSMs) to create LLMs (Falcon 40B, LLaMA, Vicuna, Alpaca-GPT4, and Baize, to name a few), the most popular models are still created and maintained by large companies (OpenAI, Google, Meta, Nvidia, etc.). We believe that, just as the many open source map providers enable low-cost or zero-cost development and experimentation, open source LLMs will provide a very strong initial stepping stone to creating applications at very low cost. However, just as the best maps are still updated and maintained by large companies that can turn proprietary data and the cost of maintaining it into a competitive advantage, so will the best LLMs. This potentially gives proprietary model developers the ability to react to and compete directly with successful applications piloted using open source models. The opportunity for venture investment in core models will be constrained by which of these OSMs gain significant adoption, and in most cases these will be targets for acquisition by the large model developers.

Applications

Another area with strong traction (both in public consciousness and business impact) and potential for investment is the development of applications that incorporate LLMs to solve critical problems for businesses and people. While most existing companies are rapidly experimenting with LLMs as a technology, startups are emerging that combine a deep understanding of what the technology can do with clear identification of problems that can be solved in unique and novel ways. We believe that truly category-defining companies can be built here, and we are beginning to see several such candidates in our models. The key for us is to ensure that the problems these startups are solving are critical enough for their customers to pay for, and as a result we are focused on identifying startups and helping them generate traction. We are also focused on understanding what sustainable competitive advantage application startups can create in their spaces to avoid being squeezed by model and infrastructure providers as well as future entrants. We believe that current conventional wisdom may understate these advantages for those building robust products with integrated LLMs (rather than simple model wrappers).

Supporting Infrastructure

Though it is less visible to the average LLM user, we see support infrastructure as potentially the largest and most diverse opportunity for innovators and investors. The explosion in models and applications has created a need for infrastructure that helps model developers build, maintain, update, and improve their models and helps application developers build more effective and reliable applications more quickly. We’ve begun to see a broad range of products in this space from new startups, growing companies, and large established players. In much the same way that traditional software support infrastructure has succeeded in recent years, we believe that AI support infrastructure is a potentially fruitful space for founders and investors, in part because infrastructure companies can work in parallel with model and application providers and serve winners in both spaces. For example, model hosting and aggregation companies like Replicate and Hugging Face are able to play a platform role with a business model that can succeed regardless of which model and application companies ultimately win. In some areas (such as cloud and hardware infrastructure), support infrastructure has been dominated in these early stages by large existing companies like Nvidia and Google. However, we believe these are still areas with potential for long-term disruption by new companies. We are also beginning to see strong startups appear in other types of infrastructure tools (like vector databases), though their traction is still early.

Our View for the Future

We have also begun using LLMs in our own internal work; use cases include categorization and duplicate detection, to name a few. Additionally, we have been asking our portfolio companies about their LLM strategy and guiding them on where best to deploy this technology first. We invested in You.com in 2020 as strong believers in their journey using NLP and AI to create the future of search, and we are excited to partner with more companies in the space that are building the future of technology unlocked by new AI tools like LLMs.

Our team’s strong data science, engineering, and AI backgrounds enable us to provide meaningful support, insights, and advice to our founders and portfolio companies. Our partners have had a broad range of impact in the AI field, including being at the forefront of research and working on cutting edge operational implementations of new technologies:

- Two of our partners, Anand and Venky, invented the concept underlying Amazon Mechanical Turk, which has played a key role in the development of machine learning and deep learning. Both are also successful founders and operators, having sold companies to Amazon and Walmart.
- Anand also co-authored the textbook Mining of Massive Datasets and co-teaches a popular course on the subject at Stanford.
- Sailesh was a Ph.D. candidate in Artificial Intelligence working on applying AI to clinical trials for cancer and was part of a team that worked on higher-level reasoning for a robotic assistant for the elderly.
- Madhu worked as the Chief Data Officer of Gojek (a 29.2M-user multi-service consumer platform in Indonesia worth $9.6B) and helped grow the business into a $1 billion unicorn.
- Yichen leveraged data science and AI skills to improve detection of fraud and anomalies within the Medicare and Medicaid systems.

With these diverse experiences, both theoretical and operational, we believe Rocketship is well equipped to help founders in the AI space take their companies to the next level.

As we continue to see founders and investors focus on the generative AI space and LLMs specifically, we believe Rocketship can add differentiated value for our partners through our extensive experience of applying AI and data science in the context of startups. Please reach out to us at inbound@rocketship.vc if you are working in the space and want to discuss your idea and the ways Rocketship can support you.